On our journey to creating a great search tool for audience intelligence, we realized that the data we use to measure influence is fatally flawed in a number of ways. Our SparkScore tool was built to help measure true engagement+reach (as opposed to mere following). But the other big problem is fake followers — accounts that exist on Twitter, technically follow accounts and might even engage, but aren’t operated by real people or are no longer active (and, therefore, not influence-able).

Today, we’re releasing a new free tool to help anyone discover what percent of any Twitter account’s followers are fake vs. real: The Fake Followers Audit.

The tool is designed to estimate the percentage of a given account’s followers that fit one or more of the following buckets:

- Spam accounts (those that purely send spam tweets)

- Bot accounts (those that have no real human actively operating them)

- Propaganda accounts (those designed to propagate dis/misinformation)

- Inactive accounts (those that no longer use Twitter or see tweets)

This tool considers a “fake” follower to be someone you cannot truly reach — an account that has no reachable person *following* you. There may be a real human who set up the account at one point, but if none of your tweets will ever be seen by them, they’re not a true follower. For the purposes of influencing people, that account may as well not exist. Thus, we apply the adjective, “fake,” to all of these groups.

For example, here’s Google’s Jeff Dean, senior fellow at the search giant, who I’m fairly certain has never bought any spam accounts or participated in any schemes to get fake followers.

The relatively high percent of “fake” followers likely comes down to a few things: 1) Jeff is followed by a lot of bots that automatically RT, save, or collect data from what he shares; 2) Jeff is on a lot of lists that bots follow (some likely for totally legitimate reasons); and 3) lots of accounts on Twitter are simply inactive, and since Jeff is a suggested account to follow if your interests at signup are around computer science, machine learning, etc., he’s received a lot of followers who rarely if ever log into Twitter or use the service.

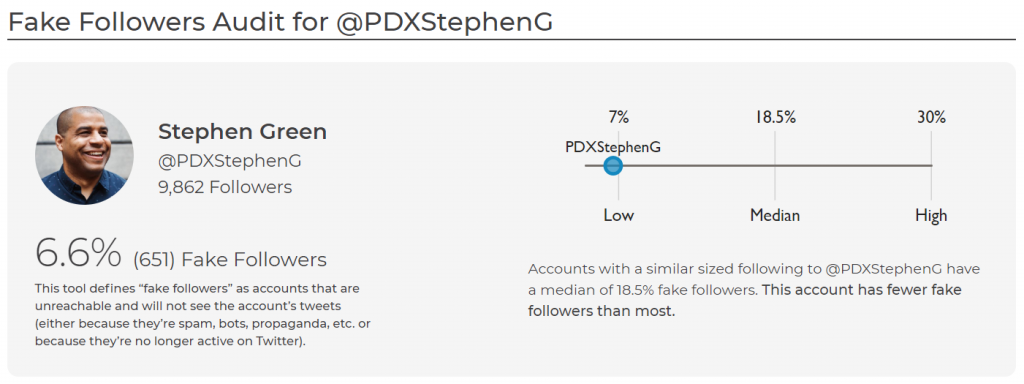

For comparison, here’s Stephen Green, community director at WeWork Portland, whose account has one of the lowest fake following numbers we’ve seen:

Stephen’s following is different from Jeff’s in a number of ways — fewer followers, more engaged & active followers, and fewer followers who come from automated suggestions or lists.

The chart below is shown on every audit (this is the one for @randfish):

We lean toward the liberal side of things, only applying the label “low quality” (aka “fake”) if an account looks highly suspicious and triggers many of the spam signals correlated with fake accounts. In our testing, we were able to identify ~65% of spam/bot/inactive accounts accurately, with extremely few false positives.
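To make "identified ~65% accurately, with extremely few false positives" concrete, here is a minimal sketch of how those two rates could be computed from a labeled test set. The function name and the toy data are made up for illustration; they are not the numbers or code behind the tool.

```python
def detection_rates(labels, predictions):
    """Return (recall, false_positive_rate) for two equal-length boolean lists.

    labels[i] is True when account i is known to be fake;
    predictions[i] is True when the audit flagged account i as fake.
    """
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y and p)          # fakes we caught
    fn = sum(1 for y, p in pairs if y and not p)      # fakes we missed
    fp = sum(1 for y, p in pairs if not y and p)      # real accounts wrongly flagged
    tn = sum(1 for y, p in pairs if not y and not p)  # real accounts left alone
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return recall, false_positive_rate


# Toy data (hypothetical): 20 known fakes, 20 known real accounts.
labels = [True] * 20 + [False] * 20
# The audit flags 13 of the fakes and none of the real accounts.
predictions = [True] * 13 + [False] * 7 + [False] * 20

recall, false_positive_rate = detection_rates(labels, predictions)
print(f"recall={recall:.2f}, false positive rate={false_positive_rate:.2f}")
```

A strict threshold trades recall for a low false positive rate: some fakes slip through, but very few real accounts get mislabeled.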

How Does The Scoring Work?

We consider an account fake if it triggers 7-10 (or more) of the signals you can see in the tool:

Our model for identifying fake accounts comes from a machine learning process run over many tens of thousands of known spam (and known real) Twitter accounts. The gory details are below for those interested:

In July of 2018, we bought 35,000 fake Twitter followers from 3 different vendors of spam/bot accounts. Our vendors sent those followers to an empty Twitter account created in 2016 that had 0 followers in July 2018. It took ~3 weeks to deliver the 35,000 followers and every day for those 3 weeks we collected data on the followers.

In addition to those 35,000 known spam accounts, we took another random sample of 50,000 non-spam accounts from SparkToro’s database of 4 million accounts. This gave us a total of 85,000 accounts to run through machine learning on AWS.

Those 85,000 accounts were split into two groups, each with a mix of spam and non-spam accounts: Group A as the training set, and Group B as the testing set used to analyze the performance of the models.
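A rough sketch of that kind of split is below. The post doesn't state the split ratio or method, so the 50/50 fraction, the per-label shuffling (which guarantees both groups contain spam and non-spam), and all names here are assumptions for illustration.

```python
import random


def split_accounts(accounts, train_fraction=0.5, seed=42):
    """Split (account_id, is_spam) pairs into (training, testing) lists.

    Spam and non-spam accounts are shuffled and cut separately so that
    both groups end up with a mix of the two labels.
    train_fraction=0.5 is an assumption, not a figure from the post.
    """
    rng = random.Random(seed)
    training, testing = [], []
    for label in (True, False):
        group = [a for a in accounts if a[1] == label]
        rng.shuffle(group)
        cut = int(len(group) * train_fraction)
        training.extend(group[:cut])
        testing.extend(group[cut:])
    return training, testing


# Scaled-down stand-in data: 35 spam and 50 non-spam accounts.
accounts = [(i, True) for i in range(35)] + [(i, False) for i in range(35, 85)]
group_a, group_b = split_accounts(accounts)
print(len(group_a), len(group_b))
```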

The following data was used for the initial model generation:

- Profile image

- Profile URL

- Verified account status

- Language

- Tweet language

- Account age in days

- Length of bio

- Number of followers

- Number of accounts they follow

- Days since last tweet

- Number of tweets

- Number of times the account appears on lists

- Location

- Display name
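As an illustration of how raw profile data like this might be turned into model inputs, here is a small sketch. The dictionary keys are hypothetical (they are not Twitter API field names), and the text fields (language, location, display name) are left out because the post doesn't describe how they were encoded.

```python
from datetime import date


def profile_features(profile, today):
    """Convert a raw profile dict (hypothetical keys) into simple features."""
    last_tweet = profile.get("last_tweet")
    return {
        "has_profile_image": bool(profile.get("profile_image_url")),
        "has_profile_url": bool(profile.get("url")),
        "verified": bool(profile.get("verified", False)),
        "account_age_days": (today - profile["created"]).days,
        "bio_length": len(profile.get("bio") or ""),
        "followers": profile.get("followers", 0),
        "following": profile.get("following", 0),
        # None means the account has never tweeted.
        "days_since_last_tweet": (today - last_tweet).days if last_tweet else None,
        "tweets": profile.get("tweets", 0),
        "listed_count": profile.get("listed_count", 0),
    }


# Made-up example profile.
example = {
    "profile_image_url": "",
    "created": date(2016, 1, 1),
    "bio": "",
    "followers": 3,
    "following": 900,
    "last_tweet": date(2018, 6, 1),
    "tweets": 2,
}
features = profile_features(example, today=date(2018, 7, 1))
print(features)
```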

After a model was found to fit the data, we analyzed the correlations to determine which features correlate closely with spam. While no single feature had a 1-to-1 correlation with spam, a good number of features showed promise. The following were most strongly correlated with spam accounts:

- Profile image – accounts lacking these are often spam

- Account age in days – certain patterns are clearly spam-correlated

- Number of followers – spam accounts tend to have very few followers

- Days since last tweet – many spam accounts rarely if ever send tweets

- Number of times the account appears on lists – spam accounts are almost never on lists

- Display name – certain keywords and patterns correlate strongly with spam

These are not the only useful signals, however: others that have decent correlation with spam (especially when multiple signals apply to a single account) also helped build a functioning model. Through trial and error (and, of course, pattern-fitting) we crafted a scoring system that could correctly identify over 65% of the spam accounts.
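For readers who want to see what "correlation with spam" can look like in practice, here is a minimal sketch using the Pearson correlation between a 0/1 feature and a 0/1 spam label. The post doesn't say which correlation measure was used, so Pearson is an assumption, and the data is invented.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists.

    Returns 0.0 when either list is constant (correlation is undefined there).
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


# Invented toy data: 4 spam accounts followed by 4 real ones.
is_spam = [1, 1, 1, 1, 0, 0, 0, 0]
missing_image = [1, 1, 1, 0, 0, 0, 0, 1]  # mostly lines up with spam
verified = [0, 0, 0, 0, 0, 0, 0, 0]       # constant, so uninformative here

image_corr = pearson(missing_image, is_spam)
verified_corr = pearson(verified, is_spam)
print(image_corr, verified_corr)
```

A feature with a moderate correlation like this one is useful as one signal among several, but is far too weak to label an account on its own.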

It’s crucial to keep in mind that no one factor tells us that an account is spam! The more spam signals fired, the more likely an account is to be spam, so our system uses 7-10+ flags before grading an account as “low quality,” or fake.
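The "count the flags, then apply a threshold" idea can be sketched as follows. Every signal name and cutoff below is hypothetical and chosen only for illustration; the real tool's signals and thresholds are the ones shown in the audit itself, and the threshold of 7 is simply the low end of the 7-10+ range mentioned above.

```python
SIGNAL_THRESHOLD = 7  # assumption: low end of the "7-10+" range


def fired_signals(features):
    """Return the names of the (hypothetical) spam signals an account triggers."""
    last = features["days_since_last_tweet"]
    checks = {
        "no_profile_image": not features["has_profile_image"],
        "no_profile_url": not features["has_profile_url"],
        "empty_bio": features["bio_length"] == 0,
        "few_followers": features["followers"] < 10,
        "never_listed": features["listed_count"] == 0,
        "dormant": last is None or last > 90,
        "few_tweets": features["tweets"] < 5,
        "follows_far_more_than_followers":
            features["following"] > 20 * max(features["followers"], 1),
    }
    return [name for name, fired in checks.items() if fired]


def is_low_quality(features, threshold=SIGNAL_THRESHOLD):
    """Flag an account only when enough independent signals fire together."""
    return len(fired_signals(features)) >= threshold


suspicious = {
    "has_profile_image": False, "has_profile_url": False, "bio_length": 0,
    "followers": 2, "listed_count": 0, "days_since_last_tweet": None,
    "tweets": 0, "following": 900,
}
ordinary = {
    "has_profile_image": True, "has_profile_url": True, "bio_length": 80,
    "followers": 1500, "listed_count": 12, "days_since_last_tweet": 1,
    "tweets": 4000, "following": 300,
}
print(is_low_quality(suspicious), is_low_quality(ordinary))
```

Requiring many signals at once is what keeps false positives low: a real person with no bio or no profile image trips one or two checks, not seven.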

How Can You Apply These Numbers?

If you’re analyzing a Twitter account to determine its true reach and influence, both SparkScore (which measures real engagement on tweets) and the Fake Followers Audit can be extremely useful. Some accounts that appear on the surface to have many followers (or even strong engagement) may in fact be followed by a large percent of fake accounts. An account with 100,000 followers with 20% fake can reach many more people than an account with 200,000 followers at 70% fake.
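The arithmetic behind that comparison is simple enough to sketch. The function name is made up for this example, and it assumes every non-fake follower is equally reachable, which is a simplification.

```python
def reachable_followers(followers, fake_share):
    """Estimate real followers given a follower count and a fake share (0 to 1)."""
    return round(followers * (1 - fake_share))


smaller_account = reachable_followers(100_000, 0.20)
larger_account = reachable_followers(200_000, 0.70)
print(smaller_account, larger_account)  # 80000 60000
```

So the account with half the headline follower count ends up with roughly 20,000 more reachable followers.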

A couple weeks ago, I asked on Twitter for examples of known accounts with high numbers of fake followers, and received a lot of great responses:

The Fake Followers Audit was shockingly good at corroborating most of the examples brought up by folks in that thread, e.g.

- @JoeMande – 39.4% fake followers

- @Demokrat_TV – 69.2% fake followers

- @Narendramodi – 54.6% fake followers

- @Geertwilderspvv – 65.5% fake followers

- @PhilJCook – 63.5% fake followers

- @BDUTT – 36.6% fake followers

- @KatyPerry – 40.6% fake followers

- @Rogerkver – 39.5% fake followers

- @Matteosalvinimi – 53.3% fake followers

- @Nigel_Farage – 35.8% fake followers

- @tina_kandelaki – 82.0% fake followers

The folks on Twitter definitely have a good sense for whose followings are fake vs. real 🙂

Our Next Steps at SparkToro

Casey and I expect this will be the last free tool we launch before shifting our full focus to the SparkToro Audience Intelligence product (which we hope to have out in the first half of next year). We’re excited to validate the methodology for SparkScore and to add in the capability to filter for fake followers. Our goal is to be across many networks and the web as a whole in the final tool (not just Twitter), but using Twitter for validation has been really helpful.

If you have feedback on this, or SparkScore, please leave a comment or tweet to @randfish and @caseyhen. Thanks!