Generative AI, and ChatGPT in particular, have been catnip to the tech press, the mainstream media, and the conversations of professionals in nearly every field. How is it going to disrupt your work?! Will AI replace you? Are Hollywood writers, real estate agents, dog walkers, and anesthesiologists even useful anymore?

In concert with the fine folks at Datos, whose opt-in, anonymized panel of 20M devices (desktop and mobile, covering 200+ countries) provides outstanding insight into what real people are doing on the web, we undertook a challenging project to answer at least some of the mystery surrounding ChatGPT.

Investigation #1: Is ChatGPT Use Increasing?

If you’ve been reading breathless takes like “ChatGPT Forecasts Use to Grow 900%” or “ChatGPT Achieves in Six Months What Facebook Needed a Decade to Do,” you’d be forgiven for assuming that the token-prediction text system has what every VC investor lusts after: J-Curve growth. But more discerning observers might have caught Honest Broker’s recent post, “Ugly Numbers from ChatGPT Reveal that AI Demand is Already Shrinking,” or the WSJ’s and Washington Post’s similar coverage.

First, the high-level look at OpenAI.com’s traffic:

Since May, OpenAI traffic has declined 29.15%. There are a variety of theories that explain this without suggesting that generative AI interest or use is actually declining:

- Theory A) Professional/regular use is still at an all-time high or growing; it’s only new users/those-checking-it-out-with-no-specific-tasks that are falling off

- Theory B) A huge amount of use is connected to schoolwork, and educational users in the US, Canada, and other countries with summer vacations are responsible for the decline mirage

Datos’ clickstream panel collects every URL visited by the millions of devices that have opted into their panel, and from these, we can see not just monthly traffic to OpenAI, but how many visits these devices make. That should help us validate or rule out Theory A.

I asked Datos to provide us with a breakdown of all panel traffic to OpenAI by visits/month since September of last year (2022). That distribution is visualized below:

Here we can see that 1-2X/month visitors have, indeed, fallen since May. That group also saw a big decline after December (when breaking news about ChatGPT 3’s capabilities spurred meteoric growth in first-time usage). But the bones of Theory A don’t hold up against an analysis of devices with 3-10 visits/month or devices with 11+ visits/month. Both of those show marked declines since May. In fact, the decline of 11+ visit/month devices has been happening since April!
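For readers who want to run the same kind of breakdown on their own clickstream data, here is a minimal sketch of the bucketing step. It assumes you already have a per-device visit count for a given month; the `may` dictionary and the function names are hypothetical and are not part of Datos’ tooling.

```python
from collections import Counter


def visit_bucket(visits: int) -> str:
    """Map a device's monthly visit count to the three buckets in the chart."""
    if visits <= 2:
        return "1-2"
    if visits <= 10:
        return "3-10"
    return "11+"


def bucket_counts(visits_per_device: dict[str, int]) -> Counter:
    """Count devices per bucket for one month, skipping devices with no visits."""
    return Counter(
        visit_bucket(v) for v in visits_per_device.values() if v > 0
    )


# Hypothetical toy data: device id -> visits to the site in one month
may = {"a": 1, "b": 4, "c": 12, "d": 2, "e": 30}
print(bucket_counts(may))
```

Running this once per month and comparing the three counts over time gives the same shape of view as the chart: if only the "1-2" bucket shrinks, Theory A holds; if all three shrink, it does not.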

That leaves us with the second theory: educational users are responsible for the decline. This is already a tough sell given the falling numbers since April, but since the process of uncovering answers reveals so much more about ChatGPT’s use, let’s continue digging in.

Investigation #2: What Tasks Do People Ask ChatGPT to Solve?

Are educational use-cases driving ChatGPT’s adoption? Are we raising a generation of students who use AI to complete most office-work tasks? Or, conversely, is ChatGPT answering questions that replace Google Search, eliminate the need for software programmers, or perhaps filling in for overworked storytellers at the role-playing game tables?

To answer these questions, Datos provided SparkToro with more than 7,000 real user prompts from ChatGPT, which we then filtered to the most credible/relevant 4,098 (removing prompts that had only a couple words or nonsense gibberish/emojis/nothing-but-foul-language/etc.). The results are fascinating.
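As a rough illustration of that kind of cleanup (not the exact filter we used), the sketch below keeps a prompt only if it contains at least three words made of letters. The three-word threshold and the `is_credible` name are assumptions; it drops emoji-only and one- or two-word prompts, but it does not catch multi-word gibberish or foul language, which would need a word list or manual review.

```python
import re

MIN_WORDS = 3  # assumption: "only a couple words" means fewer than three


def is_credible(prompt: str) -> bool:
    """Keep prompts that contain at least MIN_WORDS alphabetic words."""
    # [^\W\d_]+ matches runs of letters (Unicode-aware), so digits,
    # punctuation, and emoji are not counted as words.
    words = re.findall(r"[^\W\d_]+", prompt)
    return len(words) >= MIN_WORDS


prompts = [
    "write me a 500 word blog post about detroit plumbing problems",
    "hi there",
    "🙂🙂🙂",
]
kept = [p for p in prompts if is_credible(p)]
print(len(kept), "of", len(prompts), "prompts kept")
```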

First, I’ll break down the # of prompts per session:

We can see above that ChatGPT’s users are almost evenly split (1/3rd each) between single prompt, 2-4 prompt, and 5+ prompt sessions. But, this type of analysis doesn’t tell us what folks are doing with those prompts, and since Datos is able to deliver the full text of the ChatGPT pages, we analyzed these (using one of the best topic classification systems available: ChatGPT itself).

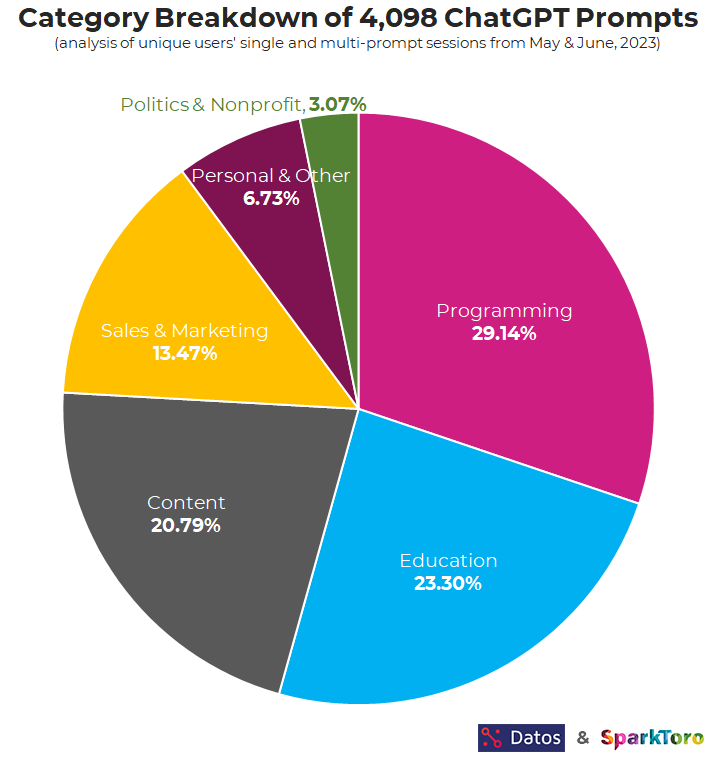

I first asked ChatGPT to provide granular classifications, then took the most common of these (only ~20 accounted for 95%+ of the 4,098 unique prompts) and manually bucketed them into top-level categories, which you can see above. Programming is the largest use-case, accounting for 29.14% of all prompt series. It’s also the clearest/least ambiguous. I hand-checked 100+ prompt series in each category (an arduous, but fascinating task) to confirm the classifier’s accuracy, and programming help (with writing specific bits of code, formatting code, catching errors in code, and more) was present in every one ChatGPT marked as such.

As others have often pointed out, the tool excels at programming-related tasks. Little wonder it’s such a popular use-case.

Next up is education — but not just primary or secondary education. Personal knowledge or interest pursuits and professional knowledge for work purposes are both included here as well. Same with content creation — some is clearly personal (D&D dungeon masters needing riddles or quests for their adventures was a recurring favorite in the dataset) while others are professional (“write me a 500 word blog post about detroit plumbing problems” – presumably a content marketer tired of writing their own material).

Sales and marketing use-cases overlap with content creation, but I chose to keep these separate to isolate the sessions that could only be classified as helping sales and marketing professionals with their tasks (analysis of analytics, questions about which channels to promote their products in, ad optimization tasks, and even messaging/promotion help were all in the dataset).

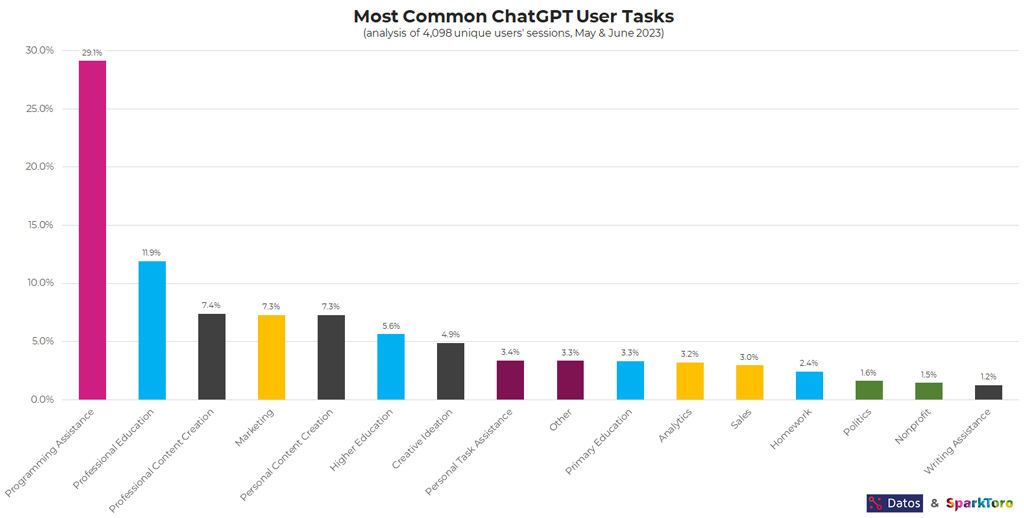

To put a finer point on this investigation, I’m providing the near-full breakdown of subcategories (save a few that were highly overlapping/subjective that I’ve combined):

I’ve used color-coding from the pie chart above to make this breakdown more scannable, e.g. “writing assistance,” “personal content creation,” “creative ideation,” and “professional content creation,” are all color-coded gray since they fit under the broader “Content” use-case.

Higher ed, primary ed, and homework were all subcategories ChatGPT classified sessions into, and together, these make up ~10% of all use cases. That’s not enough to account for the ~29% decline in traffic from April/May to July, and thus, I think we can put a nail in the coffin of Theory B.
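The arithmetic behind that conclusion is a simple upper bound, sketched below. It assumes that the share of classified sessions roughly matches the share of traffic, and it takes the worst case in which every education-related session disappears over the summer; the function name is hypothetical.

```python
def unexplained_decline(observed_decline: float, segment_share: float) -> float:
    """Decline left over after assuming an entire segment of use vanished.

    If a segment makes up `segment_share` of all use, losing all of it can
    reduce traffic by at most `segment_share`.
    """
    return observed_decline - segment_share


education_share = 0.10     # ~10% of classified sessions
observed_decline = 0.2915  # traffic decline since May

gap = unexplained_decline(observed_decline, education_share)
print(f"Unexplained decline: {gap:.1%}")
```

Even in that worst case, roughly two thirds of the decline is left unexplained by students going on vacation.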

I also found it fascinating to analyze some of the most common words in ChatGPT prompt sessions. For those curious, I’ve included a visualized chart of those below:

“Write,” “Create,” and “List” are probably obvious verbs for ChatGPT prompts. But, finding “SEO” in 2.39% of all prompt sessions? Shocking! Seeing “Game” in 4.66% was another surprise. Not featured in this breakdown, but still fun and interesting (at least to me) were:

- Judge 0.61%

- SaaS 0.56%

- Pricing 0.54%

- Curriculum 0.46%

- Employment 0.44%

- Employer 0.39%

- Attorney 0.37%

- Lawyer 0.37%

- Tweet 0.34%

- Movie 0.32%

- DnD (or D&D) 0.17%

- RPG 0.15%

Like I said, a surprising number of role-playing storytellers use ChatGPT. Maybe the Hasbro/Wizards of the Coast folks should consider that for the next upgrade on DnDBeyond 🙂
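If you want to compute this kind of word share on your own prompt data, here is a minimal sketch. It treats each percentage as the fraction of sessions that mention a term at least once, matched as a whole word and ignoring case; the sample sessions and the `session_share` helper are hypothetical.

```python
import re


def session_share(sessions: list[str], term: str) -> float:
    """Fraction of sessions containing `term` as a whole word, ignoring case."""
    pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
    hits = sum(1 for text in sessions if pattern.search(text))
    return hits / len(sessions) if sessions else 0.0


# Hypothetical sample: one string per prompt session
sessions = [
    "Write an SEO title for my plumbing blog",
    "Create a riddle for my D&D game",
    "List five board game ideas",
    "Fix this Python error",
]
print(f"{session_share(sessions, 'game'):.2%}")
print(f"{session_share(sessions, 'seo'):.2%}")
```

Counting sessions rather than raw word occurrences keeps one very long, repetitive session from inflating a term’s share.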

A huge thank you to Eli, Serge and the entire team at Datos, as well as DataSci101’s scientist-in-chief, Britney Muller, and Vayda CTO, Scott Haug, without whom this study would not have been possible.

For those curious about the data provider for this set of millions of visits and thousands of analyzed prompt series:

Datos is the leading Data-as-a-Service (DaaS) provider of privacy compliant clickstream datasets in the world. They offer event level and aggregated data feeds for most any online behavioral action imaginable, including, but not limited to, visitation, search, video consumption, and purchase.

In fact, we’ve been so impressed by Datos’ panel that SparkToro V2 (which Amanda teased earlier this week) will be employing their phenomenal data as one of the three pillars in the product’s architecture.