In July and August of this year, SparkToro solicited (via Twitter, our email newsletter, LinkedIn, Facebook, and Instagram) voluntary sharing of Google Analytics data from the web marketing community. Over 1,000 participants shared their websites’ traffic with us (through GA’s OAuth function). We then acquired metrics from four providers of traffic estimate data (SEMRush, Datos, SimilarWeb, and Ahrefs*) and compared them against Google Analytics’ reported numbers. In addition, we gathered Moz’s Domain Authority metric and Google Trends’ branded search volume estimate. All of these metrics covered the same reporting period: the 12 months from June 2020 to June 2021.

(*After we collected these metrics and published this post, it was pointed out that Ahrefs’ traffic estimate doesn’t attempt to include all traffic sources, only organic search visits, and we did not attempt to capture or measure accuracy for organic search traffic specifically. Apologies!)

Our goal was twofold:

- To uncover a reliable third-party provider whose traffic numbers had high correlation and low variance with GA numbers, so we could (hopefully) buy that data and put it into SparkToro’s audience research product.

- To share our findings about the relative accuracy of each metric with the marketing community so other marketers and analysts could make better use of this information in their own competitive analyses.

First, the bad news—today, we don’t believe any of these traffic estimates are accurate enough to include in SparkToro. I’m hopeful that changes in the future, and that maybe this project nudges providers to improve.

Second, the good news. After extensive data-gathering, wrangling, and MySQL-and-Excel wrestling, we’ve got a lot of interesting numbers to share with y’all. Let’s get into it.

Which 3rd-Party Estimate is Most Often Within +/- 30% of Google Analytics’ Users?

Choosing a single way to slice and present this data is folly, which is why you’ll find a half-dozen methodologies presented in this post. But if I were to pick my favorite, and the one I’ll be referring to most in the years ahead (until someone does a larger-scale study), it would be this chart:


To get results that are the most generous to the 3rd-party traffic estimate providers, we did four things:

- We chose to use Google Analytics’ Users metric, which purports to measure what the product once called “unique visitors.” We found that this metric lines up best with the 3rd-party providers, even those whose estimates are for total visits/all sessions.

- We used the following metrics from each provider (the ones that correlated best with any of the GA metrics we tried): SEMRush’s “visits,” pulled from their tool’s web interface with the settings (root domain), (all devices), (worldwide), (deviation range: OFF); Ahrefs’ “traffic performance” (including all subdomains), (monthly volume), (avg. organic traffic); Datos’ monthly sessions (all traffic, sent to us by the Datos team); SimilarWeb’s “total visits” (worldwide, sent to us by the SimilarWeb team); Google Trends’ “Average Interest Over Time,” collected from the web interface; and Moz’s “Domain Authority” and “Linking Root Domains” count (pulled from their API).

- 1,053 websites shared traffic with us, but we cleaned this dataset significantly before running our analyses. We excluded sites for which the 3rd parties had no data, and any that appeared to have gaps or errors in their GA-reported traffic (e.g. a missing or partial month, perhaps from removing the GA collector from their website or a subset of pages).

- We also removed a few dozen outliers from the remainder, which brought correlations and ranges of accuracy into better alignment. For the data and graphs below, 641 websites were used, giving us 7,692 unique data points to compare (12 months × 641 sites).

In the chart above, each of the four data providers is represented alongside the % of times their traffic metric was within 30% of Google Analytics’ reported “Users” for the month. Importantly, we’ve also segmented the websites by how much traffic they receive, using six total buckets:

- Sites that average 250,000+ GA users/month (46 of the 641 sites analyzed)

- Sites that average 100,000-250,000 GA users/month (39 of the 641 sites analyzed)

- Sites that average 50,000-100,000 GA users/month (91 of the 641 sites analyzed)

- Sites that average 25,000-50,000 GA users/month (96 of the 641 sites analyzed)

- Sites that average 5,000-25,000 GA users/month (186 of the 641 sites analyzed)

- Sites that average <5,000 GA users/month (183 of the 641 sites analyzed)
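For readers who want to reproduce this kind of chart on their own data, here is a minimal sketch of the headline metric: assign each site to a bucket by its average monthly GA Users, then count how often a provider’s monthly estimate lands within ±30% of that month’s GA Users. The input shape (one `(avg_monthly_users, ga_users, estimate)` tuple per site-month) and all function names are hypothetical. The bucket edges follow the list above, but the post doesn’t say how a site sitting exactly on a boundary (e.g. 100,000) was classified, so treating each lower bound as inclusive is an assumption.

```python
from collections import defaultdict

# Bucket edges (average monthly GA Users), highest first.
# Assumption: each lower bound is inclusive; the post doesn't specify tie handling.
BUCKETS = [
    (250_000, "250K+"),
    (100_000, "100K-250K"),
    (50_000, "50K-100K"),
    (25_000, "25K-50K"),
    (5_000, "5K-25K"),
    (0, "<5K"),
]


def bucket_for(avg_monthly_users: float) -> str:
    """Return the traffic bucket label for a site's average monthly GA Users."""
    for lower_bound, label in BUCKETS:
        if avg_monthly_users >= lower_bound:
            return label
    raise ValueError("traffic must be non-negative")


def within_tolerance(estimate: float, ga_users: float, tolerance: float = 0.30) -> bool:
    """True if the 3rd-party estimate is within +/- tolerance of GA Users."""
    return abs(estimate - ga_users) <= tolerance * ga_users


def share_within_30_by_bucket(rows):
    """rows: iterable of (avg_monthly_users, ga_users, estimate), one per site-month.

    Returns {bucket_label: fraction of site-months within +/-30% of GA Users}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for avg_users, ga_users, estimate in rows:
        label = bucket_for(avg_users)
        totals[label] += 1
        if within_tolerance(estimate, ga_users):
            hits[label] += 1
    return {label: hits[label] / totals[label] for label in totals}
```

For example, `share_within_30_by_bucket([(300_000, 310_000, 280_000), (300_000, 290_000, 450_000), (3_000, 3_200, 9_000)])` returns `{"250K+": 0.5, "<5K": 0.0}`: only the first estimate falls within 30% of its GA number.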

We believe these results provide a transparent and useful picture. This set of sites is large enough that if we were to 10X or even 100X the sample size (i.e. gather 100K+ websites’ GA data), the final numbers would likely be similar.

Notably, SimilarWeb is the clear winner with one exception: their traffic estimates on small websites (<5,000 monthly visitors according to GA) were the worst of the bunch. If they’d done better here, they would likely have been the runaway leader, but as you go through the other measurements of accuracy we’ve applied, you’ll see that answer gets muddier.

Correlations Between 3rd-Party Metrics and Google Analytics Users

The second metric we’re presenting is one that most stats folks will find familiar: correlation coefficient. If you’ve been following my work for a long while, you might recall that I’ve presented correlations before between things like Google rankings and Moz’s metrics (back when I was still working at that company).

This analysis is somewhat different.

Why? Because all the 3rd-party providers and Google Analytics (against which we’re comparing) are attempting to measure the same thing: web traffic. There’s no machine-learning metric being pitted against an algorithm with hundreds or thousands of inputs. The chart below simply answers the question: how well do SEMRush’s, Datos’, SimilarWeb’s, and Ahrefs’ traffic metrics compare against those collected by Google Analytics on the same websites, over the same timeframe?

The above chart shows the raw correlation between each provider’s metric and Google Analytics’ reported Users metric for each month. Correlation coefficients can technically run from -1.0 to 1.0, but the relevant range here is 0 (no correlation) to 1.0 (perfect correlation). Across the 7,692 months of data from 641 sites, SEMRush performs best of the bunch at 0.790, followed closely by Datos at 0.720, then SimilarWeb at 0.659, and Ahrefs at 0.504. (Note that Ahrefs measures organic search traffic only; when they conducted a correlation analysis of that metric against Google Search Console data, it came out to ~0.75.)
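The post doesn’t name the correlation coefficient it used. Pearson’s coefficient over paired values is the most common reading, so here is a minimal pure-Python sketch under that assumption, where each pair is one site-month of (GA Users, provider estimate); the full dataset would contain 12 × 641 = 7,692 such pairs. The function name is hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.

    Here xs would be GA Users and ys a provider's estimate, one entry per site-month.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (the 1/n factors cancel, so they're omitted).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)
```

One design note: with traffic spanning from under 5,000 to over 250,000 Users/month, a Pearson coefficient on raw values is dominated by the largest sites. Correlating log-transformed values, or using a rank-based (Spearman) coefficient, are common alternatives; the post doesn’t say whether either was used.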

We chose to also include Moz’s Domain Authority and Google Trends’ average interest over time metrics for those curious. Neither purports to measure website traffic, but both are still correlated with it, and we know marketers sometimes use them as proxies for relative traffic levels. This report might help clarify how useful they can be for that purpose (i.e. not very; you should probably switch to one of the other four providers if traffic estimation is your goal).

But correlation is just one way to measure the performance of these metrics. Many folks will also want to know: “how far off might these 3rd-party numbers be?”

Traffic Estimate Ranges for 3rd-Party Metrics

In the charts below, you’ll see a positive and negative number for each data provider. These represent the error bars, i.e. the maximum amount by which each provider over- or underestimated traffic relative to the websites’ GA numbers. Because the ranges are so large, this first graph looks only at the first bucket (sites with more than 250K GA Users/month):

This next graph compares all four providers across the five smaller traffic buckets:

In this data, we see some fascinating differences between the providers. A few that stand out to me are:

- Ahrefs’ impressively small error bars for sites with <50K GA Users/month

- SimilarWeb’s strength with sites between 5K-100K GA Users/month

- The massive error bars at the top end of the traffic spectrum, which make it very hard to trust anyone’s numbers for large websites

These aren’t averages of the delta between providers’ numbers and GA’s; they show maximums and minimums. The correlation chart above better answers the question “how far off are a 3rd party’s numbers, directionally, across the dataset?” while these charts answer the question “how far off might the numbers be?”

As you can see, that answer is often +/-100% or more, meaning a 3rd-party might say that website XYZ received 50,000 visits in June, but it actually got 5,000 or 100,000. Accuracy is generally better on smaller and midsize sites, but even there, variance can be massive.
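The error bars can be sketched as the most extreme signed percentage errors for one provider within one bucket. This sketch assumes error is measured relative to GA, i.e. (estimate - GA) / GA, which the post doesn’t spell out; the function names are hypothetical. Under that definition, underestimates bottom out at -100% while overestimates are unbounded, which is one reason the positive and negative bars can look lopsided.

```python
def percent_error(estimate: float, ga_users: float) -> float:
    """Signed error relative to GA Users: positive = overestimate, negative = underestimate."""
    if ga_users <= 0:
        raise ValueError("GA users must be positive")
    return (estimate - ga_users) / ga_users * 100


def error_bars(pairs):
    """pairs: iterable of (ga_users, estimate) for one provider in one traffic bucket.

    Returns (largest underestimate %, largest overestimate %). Either value is 0.0
    if the provider never erred in that direction.
    """
    errors = [percent_error(estimate, ga) for ga, estimate in pairs]
    if not errors:
        raise ValueError("no data")
    return min(min(errors), 0.0), max(max(errors), 0.0)
```

Applied to the example above, an estimate of 50,000 works out to `percent_error(50_000, 5_000)` → `900.0` if GA shows 5,000, and `percent_error(50_000, 100_000)` → `-50.0` if GA shows 100,000.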

How Often Do 3rd Parties Over- vs. Underestimate Traffic?

The final question we attempted to answer was whether the various traffic estimates were usually off in one direction or another. The graph below uses all six buckets of traffic across each of the four providers to comprehensively answer that question.

Some interesting findings here:

- Ahrefs is almost always underestimating (which makes sense – they’re only measuring organic search traffic, not all traffic)… until you get to the <5K GA Users/month bucket, where they’re almost always overestimating.

- Datos and SimilarWeb are fairly balanced between over- and underestimates

- SEMRush is much more often over than under
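Tallying direction is simpler than measuring size: compare each site-month’s estimate with GA and count. A minimal sketch, assuming one `(ga_users, estimate)` pair per site-month for a single provider and bucket; the function name is hypothetical, and counting exact matches separately is an assumption, since the post doesn’t say how ties were handled.

```python
from collections import Counter


def direction_shares(pairs):
    """pairs: iterable of (ga_users, estimate), one per site-month.

    Returns the share of site-months where the estimate was over, under,
    or exactly equal to GA Users.
    """
    counts = Counter()
    for ga_users, estimate in pairs:
        if estimate > ga_users:
            counts["over"] += 1
        elif estimate < ga_users:
            counts["under"] += 1
        else:
            counts["exact"] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no data")
    # Counter returns 0 for missing keys, so every direction is always reported.
    return {key: counts[key] / total for key in ("over", "under", "exact")}
```

For example, `direction_shares([(100, 120), (100, 90), (100, 130), (100, 100)])` returns `{"over": 0.5, "under": 0.25, "exact": 0.25}`.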

This doesn’t tell us how close the estimates are, but thankfully, we’ve covered that in the approaches above.

Takeaways from this Research

My hope, going into this project, was to uncover a provider (or several) whose data was trustworthy, if imperfect. My go-to for the last few years has been SimilarWeb (they have a terrific interface, and I’ve liked their data for other projects), but I also think very highly of Eli Goodman and the team at Datos as well as Tim Soulo and his compatriots at Ahrefs.

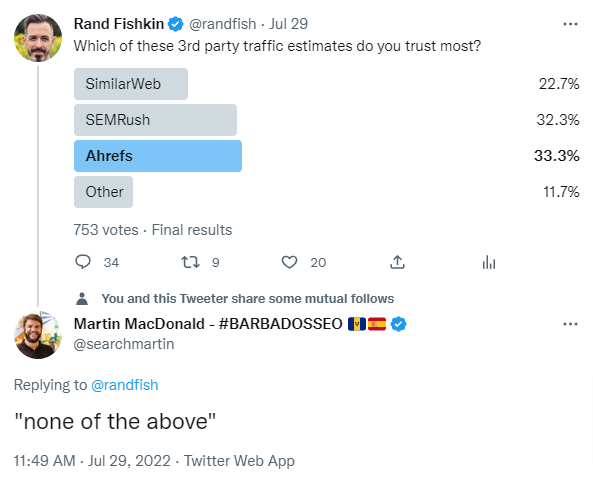

But, at least when it comes to estimating how much traffic a website gets in a given month, or whether it’s going up or down, based on this project, I’ve gotta go with Martin MacDonald on this:

For some ranges of traffic, some providers are pretty good. But no 3rd-party estimate today is consistently accurate enough to place high confidence in their numbers. Correlations aren’t awful. Error ranges aren’t either (except on the most-trafficked sites). These aren’t random guesses; they’re obviously backed by decent data sources and processes. IMO, we’re just not there yet.

How do I intend to use this data personally?

- For larger websites, I’ll continue to stick with SimilarWeb’s estimates. They’re better than anyone else’s, and almost 2/3rds of the time (~63%) they get traffic numbers right to within +/-30%.

- For small websites, Datos has the strongest numbers (though only by a hair). Since they’re a very new provider (<2 years old), I’m hopeful that in a few years, if we redo this report, they’ll be the leader.

- I gotta say I’m shocked at how poorly Google Trends’ brand search interest correlates with traffic. Of all the surprises, that’s the biggest one. Big action item for me: stop using Google Trends to predict how popular a website is. Turns out some brands that are hot don’t get much traffic to their site, and some sites with barely any brand interest get plenty.

- It’s hard to tell from their websites if Ahrefs and SEMRush are trying to estimate overall traffic, or just search traffic. If it’s the latter, SEMRush in particular may want to consider getting into the competitive analysis game. Their numbers look solid. With Ahrefs, I’m hopeful they’ll improve, and will certainly be cheering for ’em. (UPDATE: See the epilogue below for comments from Ahrefs – they *only* try to estimate search traffic, not all traffic)

- Overall, I’m going to be more skeptical of any numbers I see from 3rd parties for now. I like simple, clear, accurate metrics in our product (and so does Casey), so we’re going to stand by our % of audience metric and not add traffic estimates yet.

How you apply it to your marketing practices is up to you; my takeaways might not be yours, and that’s OK.

Epilogue: Could Machine Learning Models Help?

One approach smart data science folks might suggest here is combining the 3rd-party providers’ metrics with other reasonably correlated metrics in a machine-learning model trained on this dataset. Could that produce a high-quality estimate?

Short answer: no.

We tried using AWS’ machine learning library to build a model based on the data in this research and came up with the results you see above, i.e. not great, and barely an improvement on the 3rd-party metrics providers’ raw numbers.

Casey (my cofounder) consulted with some experienced folks in the ML world, whose suggestions all amounted to the same thing: “garbage in, garbage out,” i.e. using these 3rd-party estimates plus data like Google Trends’ average search interest and Moz’s Domain Authority (we also used linking root domain counts in case links helped predict traffic) just wasn’t enough. In fact, it didn’t prove to be much better than the best of the 3rd-party providers’ numbers for any given segment. Bummer.
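For readers curious what “combining the metrics in a model” means mechanically, here is a minimal stand-in: an ordinary least-squares fit of log GA Users on the logs of several input metrics (e.g. provider estimates). This is not the AWS model described above; the feature choice, the log transform, and all function names are assumptions for illustration only, written in pure Python so it runs without extra libraries.

```python
import math


def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; features may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_log_ols(features, targets):
    """Fit log(target) = w0 + sum_j w_j * log(feature_j) by ordinary least squares.

    features: list of rows, each a list of positive metric values for one site-month.
    targets: list of positive GA Users values, one per row.
    Returns the weight vector [w0, w1, ...].
    """
    xs = [[1.0] + [math.log(v) for v in row] for row in features]  # intercept column first
    ys = [math.log(t) for t in targets]
    k = len(xs[0])
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(row[i] * row[j] for row in xs) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(xs, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, row):
    """Predict GA Users for one row of positive metric values."""
    log_pred = weights[0] + sum(w * math.log(v) for w, v in zip(weights[1:], row))
    return math.exp(log_pred)
```

Working in log space means a 2× miss on a small site counts the same as a 2× miss on a large one, which suits data spanning several orders of magnitude. Whatever the model, it needs to be evaluated on sites it wasn’t trained on, or its accuracy will look better than it is.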

UPDATE: Tim Soulo from Ahrefs responded, noting that Ahrefs’ traffic estimate is only inclusive of organic search traffic, rather than all sources.

I’m glad we got to include them in this analysis, but it’s likely unfair to Ahrefs (who’s published an analysis measuring their own organic search traffic accuracy). I’ve made a note in the introduction about this as well.

P.S. I originally hoped to share an anonymized spreadsheet of the traffic data and 3rd-party metrics, but sadly realized that this could quickly expose sites’ identities (find a monthly traffic number in SEMRush, Ahrefs, or SimilarWeb and ctrl+f in the spreadsheet and you’d rapidly know if we used that site’s numbers). Given that we’ve promised anonymity and never to share anyone’s traffic data outside this study, that’s off the table.

A huge shoutout to the hundreds of folks who trusted us with their Google Analytics connections, and made this study possible. Those names include the following (though these websites were not necessarily the ones they shared with us): SEM Dynamics LLC, Formuladesk, ADEC Innovations Group, Sheer Tea, OneCharge, Austin Data Labs, Out of Bounds Communication, ProSites, Improve & Grow, LLC, Reisememo, Beth Floyd/Roe Digital Inc., Möbius Partners, Lunio, The Escape Game, FOURA by Fourasoft, AEON Law, DesignPlus, Tom Andrews Internet Marketing, Blissbook, Perk Brands, Bar Stool Gems, Degreechoices.com, Ethical Clothing, SchneiderB Media, OBLSK, Trading Post Fan Company, Aptuitiv / Eric Tompkins, MGIS, points N places, Searcht, Nomads Nation, Cemoh, MoreConvert, Darrell Wilson, Fintalent, Ahmad Hayat, David Wogahn, Caroline Summers, Iván Fanego, MC WooCommerce wishlist plugin, Baron Boutique, Umbraco, Definition, STOICA, Cheltenham Rare Books, Nomadic Advertising, Bidnamic, Iris Gems, Phillip Luebke and Olympus Technical Services, Resolution, Adwordizing, Robert Katai and Creatopy, Lee Frost, The Sinsemillier, Felted Cashmere, Seer Interactive, Bigwave Marketing, Kenneth Corrêa, Kukarella, Faraaz Jamal and Mikra, TenScores, and Nicolai Grut. Thank you all!