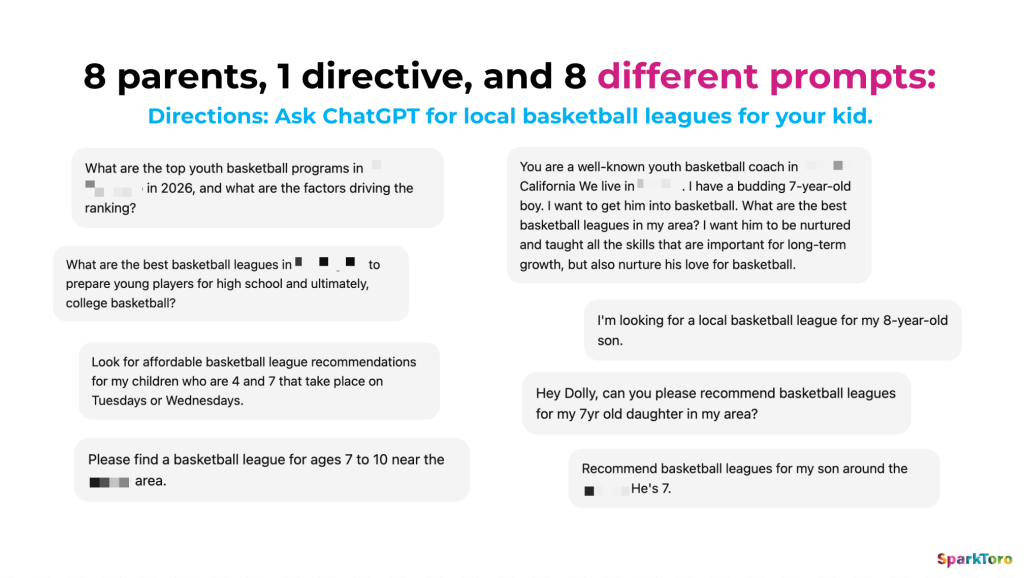

Recently, I ran a tiny experiment. I asked eight mom friends — people very similar to me socioeconomically and with kids around the same age — to look up local basketball leagues for their kids. My only instructions were to search as though they really wanted a good answer. (And of course, “good” is subjective.)

Every single one of them did it differently.

One mom asked ChatGPT to role play and explained the qualities she’s looking for in a league:

“You are a well-known youth basketball coach in [region]. We live in [city]. I have a budding 7-year-old boy. I want to get him into basketball. What are the best basketball leagues in my area? I want him to be nurtured and taught all the skills that are important for long-term growth, but also nurture his love for basketball.”

Another focused on location:

“Please find a basketball league for ages 7 to 10 near the [ZIP code] area.”

One of them gave ChatGPT a name but didn’t specify her area (lol):

“Hey Dolly, can you please recommend basketball leagues for my 7yr old daughter in my area?”

And, for what it’s worth, here’s my prompt: “Can you recommend some not competitive basketball clubs or leagues?”

Same (general) intent. Same life stage. Wildly different prompts.

This has stuck with me because it perfectly mirrors what we found in SparkToro’s recent large-scale research on AI recommendations.

In our AI visibility study with Gumshoe, we asked hundreds of people to write prompts that expressed the same underlying intent. When we analyzed those prompts, the results were striking. People almost never phrased their prompts in similar ways. The average semantic similarity score across human-written prompts was 0.081 — meaning the wording varied dramatically, even when everyone wanted the same type of answer.
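If you want a feel for what a score like that measures, here is a minimal sketch that averages the similarity of every pair of prompts in a small set. It is an illustration, not the study's method: it uses plain word-count cosine similarity as a crude stand-in, whereas a real analysis would more likely compare embeddings. The function names are my own, and the prompts are three of the mom prompts from above.

```python
import math
import re
from collections import Counter
from itertools import combinations


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two prompts based on raw word counts.

    Assumption: this is a bag-of-words proxy, not an embedding-based score.
    """
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mean_pairwise_similarity(prompts):
    """Average the similarity over every unordered pair of prompts."""
    pairs = list(combinations(prompts, 2))
    if not pairs:
        raise ValueError("need at least two prompts")
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


prompts = [
    "Please find a basketball league for ages 7 to 10 near the [ZIP code] area.",
    "Hey Dolly, can you please recommend basketball leagues for my 7yr old daughter in my area?",
    "Can you recommend some not competitive basketball clubs or leagues?",
]

score = mean_pairwise_similarity(prompts)
print(f"mean pairwise similarity: {score:.3f}")
```

A word-count score and an embedding score are not on the same scale, so don't compare this output to the 0.081 figure directly. The point is the shape of the calculation: many prompts, every pair compared, one average.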

And this is just normal human behavior. People don’t reduce intent to a neat set of keywords. They explain. They qualify. They add context. They describe constraints. They personalize the question based on their own experience.

In other words: people don’t “search” anymore. They ask. And increasingly with LLMs, they… talk.

Same Intent, Different Words — Similar Results

Many AI visibility tools unintentionally inherit assumptions from keyword-based search:

- There’s a small, representative set of prompts worth tracking

- Phrasing is relatively stable

- Optimizing for a handful of queries gets you most of the way there

But both the SparkToro/Gumshoe research and my tiny mom-basketball experiment show why those assumptions fall apart. If ten people with the same need phrase their question ten different ways, then tracking visibility on one or two “ideal” prompts tells you very little about how often you’re actually showing up for real people.

This is why AI visibility can feel confusing or unreliable. The input side of the system is far more diverse than marketers can predict or control. But there’s something reassuring in the SparkToro/Gumshoe study: despite massive variation in how humans wrote their prompts, AI tools often returned similar clusters of brands across those phrasings. The wording and/or order changed, but the set of recommendations frequently overlapped.
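One simple way to put a number on "the set of recommendations frequently overlapped" is Jaccard overlap: the brands two answers share, divided by all the brands either answer mentioned. The sketch below is my own illustration, not how the study measured it, and the league names and prompt labels are made-up placeholders.

```python
from itertools import combinations


def jaccard(a, b):
    """Shared brands divided by all brands mentioned (order is ignored)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def mean_overlap(recommendations):
    """Average Jaccard overlap across every pair of prompt phrasings."""
    pairs = list(combinations(recommendations.values(), 2))
    if not pairs:
        raise ValueError("need recommendations for at least two phrasings")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical data: placeholder brands returned for three phrasings of one intent.
recommendations = {
    "role-play prompt": ["League A", "League B", "League C"],
    "ZIP code prompt": ["League B", "League A", "League D"],
    "non-competitive prompt": ["League C", "League A", "League B"],
}

overlap = mean_overlap(recommendations)
print(f"mean brand overlap: {overlap:.2f}")
```

Because sets ignore order, two answers that list the same brands in a different sequence count as a perfect match, which fits the "order changed, set overlapped" pattern described above.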

One solid takeaway from this: AI models are generally good at recognizing underlying intent, even when the surface language looks very different.

For marketers and founders, this reframes the problem. The question isn’t “which exact prompt should I optimize for?” It’s “am I showing up reliably across the full semantic neighborhood of this intent?” That’s a meaningfully different challenge — and a more tractable one.
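To make "showing up reliably" concrete, you could run many phrasings of the same intent and count how often your brand appears in the answers. This is a rough sketch under my own assumptions: the function name, the sample answers, and the naive substring matching are all hypothetical, and you would collect the answer text yourself from whichever AI tools you care about.

```python
def appearance_rate(brand, answers):
    """Fraction of answers that mention the brand at least once.

    Assumption: a case-insensitive substring match is good enough here.
    It can over-match (e.g. "League A" inside "League Alpha"), so a real
    check would want stricter matching.
    """
    if not answers:
        raise ValueError("need at least one answer")
    needle = brand.lower()
    hits = sum(1 for text in answers if needle in text.lower())
    return hits / len(answers)


# Hypothetical answers, one per prompt phrasing of the same intent.
answers = [
    "Try League A or League B for beginners.",
    "League B and League D are close to that ZIP code.",
    "For a low-pressure start, look at league a.",
    "League C runs a weekend clinic.",
]

rate = appearance_rate("League A", answers)
print(f"League A appeared in {rate:.0%} of answers")
```

A rate like this over dozens of real-language phrasings says more than a single "rank" on one hand-picked prompt.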

An Audience Research Problem, Not a Measurement Problem

This is why I keep coming back to the same conclusion: AI visibility is an audience research problem before it’s a measurement problem.

If you want to appear in AI answers, you don’t start by asking which prompt to track, where you rank in ChatGPT, or even where you rank in the Googles. A better starting point is asking yourself or your team:

- How do people actually describe this problem?

- What constraints do they mention?

- What words do they use when they’re unsure, overwhelmed, or comparing options?

- How does their language change based on experience level, confidence, or urgency?

And most importantly: How am I providing answers to these questions, online, in language that matches how real people talk?

For us at SparkToro, getting mentioned by the LLMs wasn’t the strategy. What we’ve always done is educate people about audience research, make it as easy as possible for marketers, and get them to associate audience research with us, your friends at SparkToro. It’s why you can read everything on our website, watch our past Office Hours webinars on YouTube, and listen to or watch Rand and me on various podcasts evangelizing audience research and doing good marketing. AI visibility was the outcome of this strong focus on content creation, distribution, and PR.

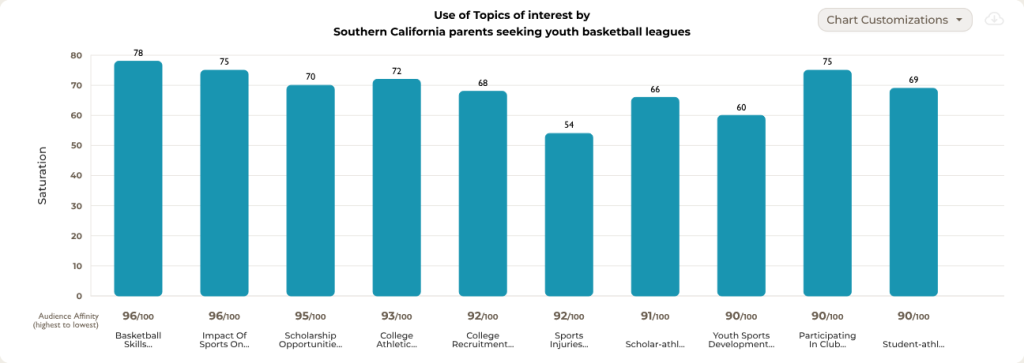

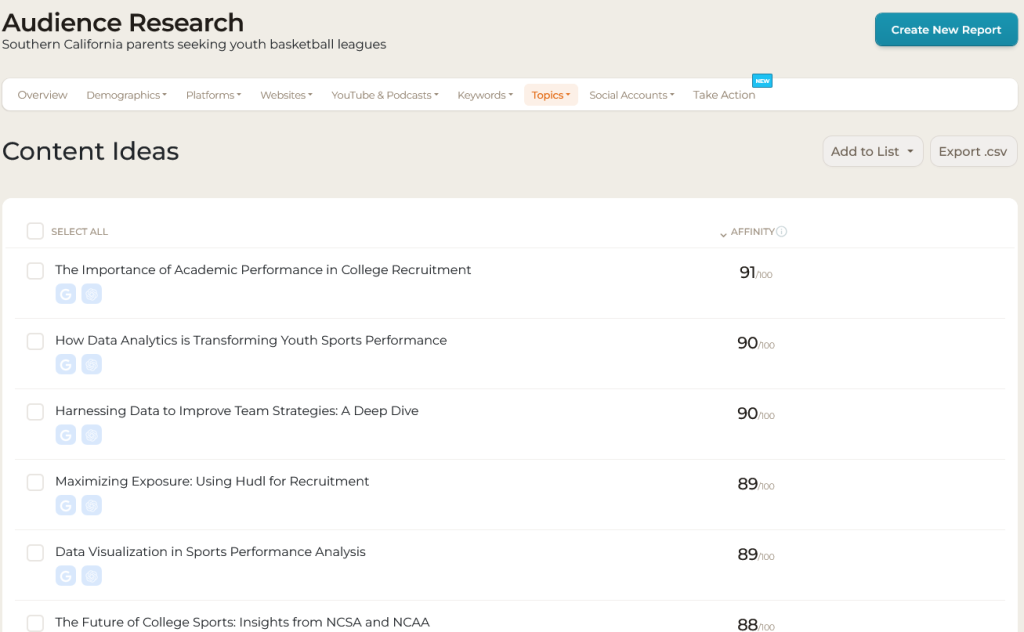

Teams who already invest in audience research (listening to real language in forums, reviews, social posts, support tickets, and conversations) and tie that knowledge to their marketing and business programs have a structural advantage here. They’re not just building a better content strategy. They’re building the kind of distributed, interconnected, real-language presence that AI models draw on when forming recommendations. Blog posts that link to each other. Transcripts on YouTube videos. Mentions in subreddits. Customer support resources that are easy to find and parse. Earned media that puts the brand in the right context.

Ok, So Now What?

Once you’ve done the audience research, the job becomes translating it into content. If you discover that your customers describe their problem as “I don’t know where to start” rather than “I need a solution for X,” that’s not just a persona insight — that’s a phrase to build content around. Write the blog post. Answer the forum question. Create the comparison guide. Get that language indexed and crawled across the web in ways that position you as the obvious answer.

The eight prompts from those eight parents reflected slightly different mental models of the same task. The mom who was looking for a nurturing league was optimizing for a different thing than the mom who gave a ZIP code. But they were all looking for an age-appropriate basketball league. They just had slightly different filters and their own ways of communicating. (Hello, Dolly!)

If your AI visibility strategy assumes people ask questions the same way, it’s already misaligned with reality. But if you’ve spent real time understanding how your audience talks, you’re building something that works with the way AI models actually operate.